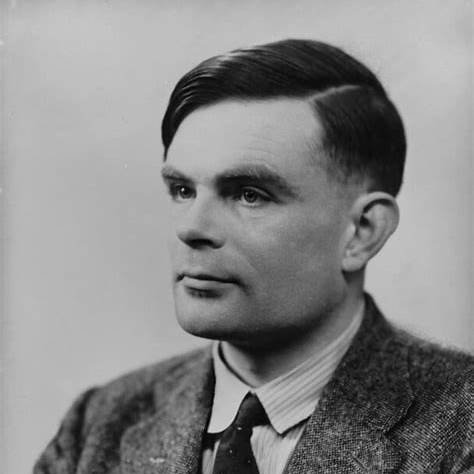

Artificial intelligence did not burst onto the scene fully formed in the 1950s; its roots stretch back to a quiet but extraordinary 36-page paper published in 1936 by a 24-year-old mathematician named Alan Turing. In “On Computable Numbers, with an Application to the Entscheidungsproblem,” Turing introduced an abstract device—the Turing Machine—that would become the conceptual bedrock of modern computing and, by extension, of AI.

A Radical Answer to Hilbert’s Challenge

Turing’s motivation was David Hilbert’s Entscheidungsproblem (“decision problem”): is there a mechanical procedure that can decide, for any statement of first-order logic, whether it is provable? Turing reframed the question by asking what it means to calculate at all. He imagined a machine that reads and writes symbols on an infinite tape, moving left or right one square at a time under the control of a finite table of rules. With this simple model he proved that some precisely stated problems cannot be solved by any machine. For example, no machine can determine, given the description of an arbitrary machine, whether that machine will ever print a given symbol. From this he derived a negative answer to Hilbert.
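To make the tape-and-rules model concrete, here is a minimal Python sketch of a single-tape machine. It follows a common modern textbook formulation rather than Turing’s exact 1936 conventions. The names `run_turing_machine` and `INCREMENT`, the blank symbol `_`, and the example machine (which adds 1 to a binary number) are all illustrative assumptions. The step limit is only a practical cutoff: as follows from Turing’s result, there is no general way to decide in advance whether an arbitrary machine will halt.

```python
from collections import defaultdict

L, R = -1, +1   # head movements: one square left or one square right
BLANK = "_"     # assumed symbol filling every square that was never written

# Transition table: (state, symbol read) -> (symbol to write, move, next state)
Rules = dict[tuple[str, str], tuple[str, int, str]]


def run_turing_machine(rules: Rules, tape_input: str, start: str, halt: str,
                       max_steps: int = 10_000) -> str:
    """Simulate a single-tape Turing machine and return the non-blank part of the tape."""
    # A defaultdict models an unbounded tape: unwritten squares read as blank.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            # Practical cutoff only; halting cannot be decided in general.
            raise RuntimeError("step limit reached; the machine may never halt")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = sorted(i for i, s in tape.items() if s != BLANK)
    if not used:
        return ""
    return "".join(tape.get(i, BLANK) for i in range(used[0], used[-1] + 1))


# Hypothetical example machine: add 1 to a binary number.
# State "right" scans to the end of the input; "carry" propagates the carry leftward.
INCREMENT: Rules = {
    ("right", "0"): ("0", R, "right"),
    ("right", "1"): ("1", R, "right"),
    ("right", BLANK): (BLANK, L, "carry"),
    ("carry", "1"): ("0", L, "carry"),    # 1 + carry = 0, carry continues
    ("carry", "0"): ("1", L, "done"),     # 0 + carry = 1, finished
    ("carry", BLANK): ("1", L, "done"),   # carry runs past the leftmost digit
}

print(run_turing_machine(INCREMENT, "1011", start="right", halt="done"))  # prints 1100
```

All of the machine’s behavior lives in the rules table; the simulator loop itself only reads, writes, moves, and changes state.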

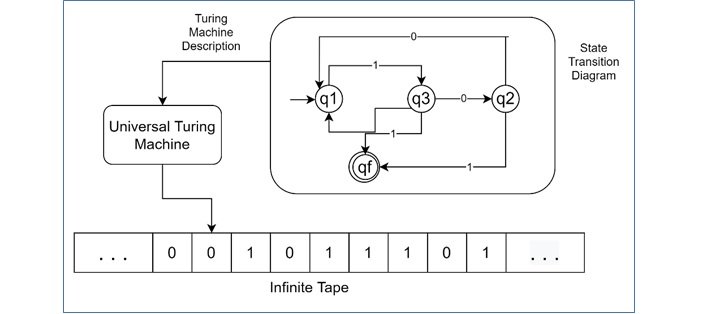

The Birth of Universality

The truly revolutionary step was Turing’s realization that a single “universal” machine could simulate any other machine simply by reading its description from the tape. In one stroke he separated hardware from software and planted the idea of a general-purpose computer—an intellectual leap that still underpins everything from smartphones to supercomputers. The universal Turing machine formalized the notion of programmability decades before microprocessors existed.
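Universality can be sketched in the same spirit: one fixed interpreter whose behavior comes entirely from a machine description stored on the tape alongside the input. The text encoding below (rules written as `state,read,write,move,next`, separated by `;`, with `#` dividing the description from the input) is a hypothetical format far simpler than Turing’s own “standard description,” and the names `decode`, `universal`, `INCREMENT`, and `FLIP` are illustrative. The interpreter is also written in Python rather than being itself a Turing machine, so this illustrates the idea rather than reproducing Turing’s construction.

```python
from collections import defaultdict


def decode(description: str) -> dict[tuple[str, str], tuple[str, int, str]]:
    """Parse the hypothetical 'state,read,write,move,next;...' encoding into a rule table."""
    moves = {"L": -1, "R": 1}
    table = {}
    for rule in description.split(";"):
        state, read, write, move, nxt = rule.split(",")
        table[(state, read)] = (write, moves[move], nxt)
    return table


def universal(tape_contents: str, start: str = "q0", halt: str = "halt",
              max_steps: int = 10_000) -> str:
    """One fixed interpreter: the tape holds '<machine description>#<input>'."""
    description, _, data = tape_contents.partition("#")
    rules = decode(description)          # the "program" is read from the tape as data
    tape = defaultdict(lambda: "_", enumerate(data))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the simulated machine may never halt")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = sorted(i for i, s in tape.items() if s != "_")
    if not used:
        return ""
    return "".join(tape.get(i, "_") for i in range(used[0], used[-1] + 1))


# Two different hypothetical machines, written purely as data.
INCREMENT = (                            # add 1 to a binary number
    "q0,0,0,R,q0;q0,1,1,R,q0;q0,_,_,L,c;"
    "c,1,0,L,c;c,0,1,L,halt;c,_,1,L,halt"
)
FLIP = "q0,0,1,R,q0;q0,1,0,R,q0;q0,_,_,L,halt"   # invert every bit

print(universal(INCREMENT + "#1011"))  # prints 1100
print(universal(FLIP + "#1011"))       # prints 0100
```

Swapping `FLIP` for `INCREMENT` changes what the computation does without touching `universal`, which is the separation of program from machine that the universal machine introduced.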

Blueprints for a Programmable Future

Because Turing machines (and therefore the universal machine) appear capable of carrying out any effective procedure, a claim now known as the Church–Turing thesis, they provided a mathematical definition of computation itself. Later AI pioneers, among them John McCarthy, Marvin Minsky, Allen Newell, and Herbert Simon, built symbolic reasoning systems on computers whose lineage traces straight back to Turing’s tape-and-head abstraction. Early AI languages such as IPL and LISP implemented the kind of symbol manipulation that Turing’s analysis had already shown to be mechanically realizable.

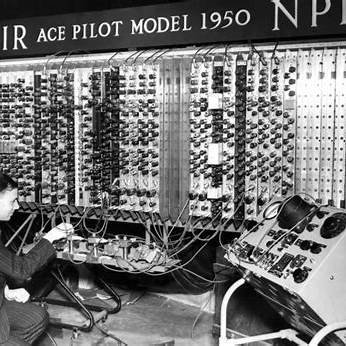

From Thought Experiment to Hardware

During World War II Turing turned to real machines, leading the design of the electromechanical “bombes” that helped Bletchley Park break German Enigma traffic. After the war he proposed the Automatic Computing Engine (ACE), one of the first complete designs for a stored-program electronic computer. Although the full ACE was not built in his lifetime, a scaled-down Pilot ACE ran successfully in 1950, and its design directly influenced British computing efforts. Machines like the ACE demonstrated that the abstract idea of a universal, programmable machine could be engineered in hardware: in vacuum tubes and mercury delay lines at the time, and in silicon today.

Foreshadowing Artificial Intelligence

In 1950, Turing’s celebrated essay “Computing Machinery and Intelligence” asked whether machines could think, helping lay the philosophical foundations of AI and proposing the “imitation game,” now known as the Turing Test, as an operational criterion. Yet the conceptual groundwork had been laid 14 years earlier: if any computation could be encoded on a tape, why not cognition itself? Symbol manipulation, learning rules, and even neural networks (Turing’s 1948 “unorganised machines”) all fit naturally within the framework the universal-machine idea established.

Enduring Legacy

Turing’s 1936 paper provided:

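- A precise model of computation: still taught as the benchmark against which other models of computation and programming languages are measured (a system that can simulate any Turing machine is called “Turing-complete”).

- The concept of programmability: enabling software to outlive hardware generations.

- A philosophical lens: the suggestion that cognition might be understood as computation, a premise explored through explicit symbol processing in classical AI and through learned statistical models in today’s large language models.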

As modern AI systems crunch petabytes of data in cloud-scale clusters, they remain, at their core, elaborate descendants of Turing’s imaginary machine. Every line of code and every neural network weight update is ultimately a sequence of discrete operations that a universal Turing machine could emulate, given enough time and tape.

Conclusion

Calling the Turing Machine the “seed” of AI is no mere historical flourish. It is the mathematical DNA from which the entire computational ecosystem evolved. By abstracting the act of calculation into a simple tape and head, Turing gave us not only an answer to a foundational question in logic but a vision of machines that learn, reason, and perhaps one day converse as naturally as we do. For anyone tracing the arc of artificial intelligence, the journey begins in 1936, with a young mathematician sketching blueprints for thinking machines on an infinite strip of paper.