In the late 1950s, John McCarthy—a mathematician fresh from co-organising the Dartmouth Conference—decided that existing languages were ill-suited to the symbolic reasoning at the heart of artificial intelligence. His answer was LISP (LISt Processing), a language that treated code and data as the same nested list structure. In doing so, he gave AI researchers a malleable laboratory for ideas and launched a family of languages that still shape research today.

From AI Thought Experiment to Working Code

McCarthy first sketched LISP at MIT in 1958. He borrowed list-processing ideas from Newell, Shaw, and Simon’s IPL and took the lambda notation from Alonzo Church’s lambda calculus. The breakthrough came when he showed that the entire evaluator, eval, could itself be written in LISP. That made the language meta-circular and unusually easy to extend. He formalised these insights in his landmark 1960 paper “Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I.” The paper introduced S-expressions and garbage collection, and it showed that a universal LISP function could play the role of a universal Turing machine.
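The following is a minimal sketch of a McCarthy-style evaluator that makes the eval/apply idea concrete. It is written in Python rather than in LISP, so it shows the shape of eval and apply but is not itself meta-circular. The representation is an assumption made for illustration:

- S-expressions become nested Python lists.
- Symbols become strings.
- The empty list stands for NIL.
- The symbol `t` stands for true.

Only the primitives atom, eq, car, cdr, and cons are supported, plus the special forms quote, cond, lambda, and label. The names `parse`, `lisp_eval`, `lisp_apply`, and `GLOBAL` are hypothetical.

```python
# Minimal sketch of a McCarthy-style eval/apply pair (illustrative only).
# Assumed representation: nested lists for S-expressions, str for symbols,
# [] for NIL, and the symbol "t" for true.

T, NIL = "t", []


def tokenize(src: str) -> list[str]:
    return src.replace("(", " ( ").replace(")", " ) ").split()


def read(tokens: list[str]):
    """Consume tokens and return one S-expression."""
    tok = tokens.pop(0)
    if tok == "(":
        items = []
        while tokens[0] != ")":
            items.append(read(tokens))
        tokens.pop(0)  # discard the closing ")"
        return items
    return tok


def parse(src: str):
    return read(tokenize(src))


def is_atom(x) -> bool:
    return isinstance(x, str) or x == NIL


# Primitive functions, implemented directly in the host language.
GLOBAL = {
    "atom": lambda x: T if is_atom(x) else NIL,
    "eq": lambda a, b: T if is_atom(a) and a == b else NIL,
    "car": lambda x: x[0],
    "cdr": lambda x: x[1:],
    "cons": lambda a, d: [a] + d,
}


def lisp_eval(expr, env: dict):
    if expr == NIL or expr == "nil":
        return NIL
    if expr == "t":
        return T
    if isinstance(expr, str):          # any other symbol: variable lookup
        return env[expr]
    op, *args = expr
    if op == "quote":                  # (quote x) returns x unevaluated
        return args[0]
    if op == "cond":                   # (cond (p1 e1) (p2 e2) ...)
        for test, result in args:
            if lisp_eval(test, env) != NIL:
                return lisp_eval(result, env)
        return NIL
    if op == "lambda":                 # build a closure over the current env
        params, body = args
        return ("closure", params, body, env)
    if op == "label":                  # (label name fn) binds name for recursion
        name, fn_expr = args
        rec_env = dict(env)
        closure = lisp_eval(fn_expr, rec_env)
        rec_env[name] = closure        # the closure can now see its own name
        return closure
    # Ordinary application: evaluate operator and operands, then apply.
    return lisp_apply(lisp_eval(op, env), [lisp_eval(a, env) for a in args])


def lisp_apply(fn, args: list):
    if callable(fn):                   # primitive
        return fn(*args)
    _, params, body, env = fn          # user-defined closure
    return lisp_eval(body, {**env, **dict(zip(params, args))})


# ff returns the first atom of an S-expression (modelled on ff in McCarthy's paper).
prog = parse("""
((label ff (lambda (x) (cond ((atom x) x) (t (ff (car x))))))
 (quote ((a b) c)))
""")
print(lisp_eval(prog, GLOBAL))  # prints: a
```

Keeping eval and apply as two mutually recursive functions mirrors the division of labour described in the paper. eval decides what an expression means, and apply runs a function on already-evaluated arguments.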

The First Interpreter and the Birth of Garbage Collection

Implementation was swift. Steve Russell, a programmer on McCarthy’s team, realised that the eval function could be hand-translated into machine code for the IBM 704. He promptly wrote the first interpreter, much to McCarthy’s astonishment. Lists were created and discarded constantly at runtime, so the system also needed automatic garbage collection. McCarthy devised it to free programmers from manual memory management, and the idea later became the foundation of managed runtimes such as the JVM and .NET.
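McCarthy’s collector is generally described as mark-and-sweep. When free storage runs out, the collector traces every cell reachable from the program’s roots and then reclaims everything else. The toy model below illustrates that idea under stated assumptions:

- The heap has a fixed, hypothetical capacity.
- Cells live in a Python list.
- The sweep filters that list rather than threading freed cells onto a free-storage list as a real implementation would.

`Cell`, `Heap`, and their methods are hypothetical names.

```python
# Toy mark-and-sweep collector over cons cells (illustrative sketch only).
from dataclasses import dataclass


@dataclass(eq=False)
class Cell:
    car: object          # an atom (str) or another Cell
    cdr: object
    marked: bool = False


class Heap:
    def __init__(self, capacity: int):
        self.capacity = capacity      # hypothetical fixed number of cells
        self.cells: list[Cell] = []   # every allocated cell
        self.roots: list[Cell] = []   # cells the program can still reach directly

    def cons(self, car, cdr) -> Cell:
        if len(self.cells) >= self.capacity:
            # Out of space: collect, treating the new cell's fields as roots
            # so they cannot be reclaimed while we are still using them.
            self.collect(extra_roots=(car, cdr))
            if len(self.cells) >= self.capacity:
                raise MemoryError("heap exhausted: every cell is still reachable")
        cell = Cell(car, cdr)
        self.cells.append(cell)
        return cell

    def _mark(self, obj) -> None:
        stack = [obj]                 # explicit stack avoids deep recursion
        while stack:
            x = stack.pop()
            if isinstance(x, Cell) and not x.marked:
                x.marked = True
                stack.extend((x.car, x.cdr))

    def collect(self, extra_roots=()) -> int:
        """Mark everything reachable from the roots, sweep the rest; return cells freed."""
        for root in (*self.roots, *extra_roots):
            self._mark(root)
        live = [c for c in self.cells if c.marked]
        freed = len(self.cells) - len(live)
        for c in live:
            c.marked = False          # reset marks for the next cycle
        self.cells = live
        return freed


heap = Heap(capacity=4)
lst = heap.cons("a", heap.cons("b", "nil"))   # the list (a b): two cells
heap.roots.append(lst)
heap.cons("x", "nil")                          # unreachable garbage
heap.cons("y", "nil")                          # heap is now full
heap.cons("z", "nil")                          # triggers a collection first
print(len(heap.cells))                         # prints: 3
```

Collection happens only when allocation fails. That matches the "collect when storage is exhausted" behaviour described above. The price is an unpredictable pause whenever the heap fills.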

Running on the room-sized 704, early LISP could manipulate symbolic algebra, differentiate expressions, and transform logical formulas. These tasks lay well beyond the numerical focus of FORTRAN. The demonstrations convinced many researchers that symbolic AI demanded its own linguistic tools.
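Symbolic differentiation shows why list structure suited this work. An expression is a tree, and each differentiation rule is a small recursive case. The Python sketch below makes several assumptions:

- Expressions are prefix nested lists.
- Only two-operand `+` and `*` are supported.
- A few simplifications are applied.

`deriv`, `simplify`, and `show` are hypothetical helpers, not reconstructions of the original LISP programs.

```python
# Symbolic differentiation over prefix S-expressions (illustrative sketch).
# Assumed forms: numbers, symbols (str), ["+", a, b], and ["*", a, b].


def is_num(x) -> bool:
    return isinstance(x, (int, float))


def simplify(expr):
    """Apply a few identities: constant folding, x+0, x*0, x*1."""
    op, a, b = expr
    if op == "+":
        if is_num(a) and is_num(b):
            return a + b
        if a == 0:
            return b
        if b == 0:
            return a
    if op == "*":
        if is_num(a) and is_num(b):
            return a * b
        if a == 0 or b == 0:
            return 0
        if a == 1:
            return b
        if b == 1:
            return a
    return expr


def deriv(expr, var: str):
    """Differentiate expr with respect to the symbol var."""
    if is_num(expr):
        return 0                                   # d(c)/dx = 0
    if isinstance(expr, str):
        return 1 if expr == var else 0             # dx/dx = 1, dy/dx = 0
    op, a, b = expr
    if op == "+":                                  # sum rule
        return simplify(["+", deriv(a, var), deriv(b, var)])
    if op == "*":                                  # product rule: (ab)' = a'b + ab'
        return simplify(["+",
                         simplify(["*", deriv(a, var), b]),
                         simplify(["*", a, deriv(b, var)])])
    raise ValueError(f"unsupported operator: {op!r}")


def show(expr) -> str:
    """Print an expression back in LISP-style prefix notation."""
    if isinstance(expr, list):
        return "(" + " ".join(show(e) for e in expr) + ")"
    return str(expr)


# d/dx (x*x + 3*x)
expr = ["+", ["*", "x", "x"], ["*", 3, "x"]]
print(show(deriv(expr, "x")))   # prints: (+ (+ x x) 3)
```

The result reads as 2x + 3. A fuller simplifier would also collect like terms, which is exactly the kind of rule-by-rule extension that list-based representations make easy.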

Why AI Fell in Love with LISP

- Homoiconicity – Programs are lists; lists are data. This made it trivial to write programs that write programs, a critical capability for theorem provers, expert systems, and—decades later—meta-learning research.

- Macros & DSLs – Compile-time macros let researchers embed domain-specific languages for planning, natural-language dialogue, or vision pipelines without leaving LISP.

- Interactive REPL – The read–eval–print loop fostered exploratory coding, allowing scientists to tweak rules and see immediate effects.

- Portability of Ideas – Because the core semantics are tiny (eval plus a handful of list primitives), variants such as Maclisp, Zetalisp, Scheme, and later Common Lisp could evolve while sharing a common intellectual DNA.
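Homoiconicity and macros can be imitated loosely in Python to show how "programs that write programs" work. In LISP itself the program would be an S-expression and the macros would be defined with `defmacro`. In this sketch, programs are nested Python lists and macros are ordinary functions that receive unevaluated code and return new code. The `when` and `unless` forms are simplified single-body versions modelled on the Common Lisp macros of the same names. `defmacro`, `macroexpand_all`, and `to_source` here are hypothetical Python helpers.

```python
# Code-as-data sketch: a program is a nested list, and "macros" are ordinary
# functions that take unevaluated code and return new code (hypothetical names).

MACROS = {}


def defmacro(name: str):
    """Register a Python function as the expander for macro `name`."""
    def register(fn):
        MACROS[name] = fn
        return fn
    return register


@defmacro("when")
def expand_when(test, body):
    # (when test body)  =>  (cond (test body))
    return ["cond", [test, body]]


@defmacro("unless")
def expand_unless(test, body):
    # (unless test body)  =>  (cond (test nil) (t body))
    return ["cond", [test, "nil"], ["t", body]]


def macroexpand_all(form):
    """Expand macro calls repeatedly until only core forms remain."""
    if not isinstance(form, list) or not form:
        return form
    head = form[0]
    if head == "quote":                        # quoted data is left untouched
        return form
    if isinstance(head, str) and head in MACROS:
        return macroexpand_all(MACROS[head](*form[1:]))
    return [macroexpand_all(sub) for sub in form]


def to_source(form) -> str:
    if isinstance(form, list):
        return "(" + " ".join(to_source(f) for f in form) + ")"
    return str(form)


program = ["when", ["atom", "x"],
           ["unless", ["eq", "x", ["quote", "nil"]], ["print", "x"]]]
print(to_source(macroexpand_all(program)))
# prints: (cond ((atom x) (cond ((eq x (quote nil)) nil) (t (print x)))))
```

Code and data share one representation, so the expander needs no separate parser or AST library. It simply walks and rebuilds lists, which is the capability the bullets above attribute to LISP.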

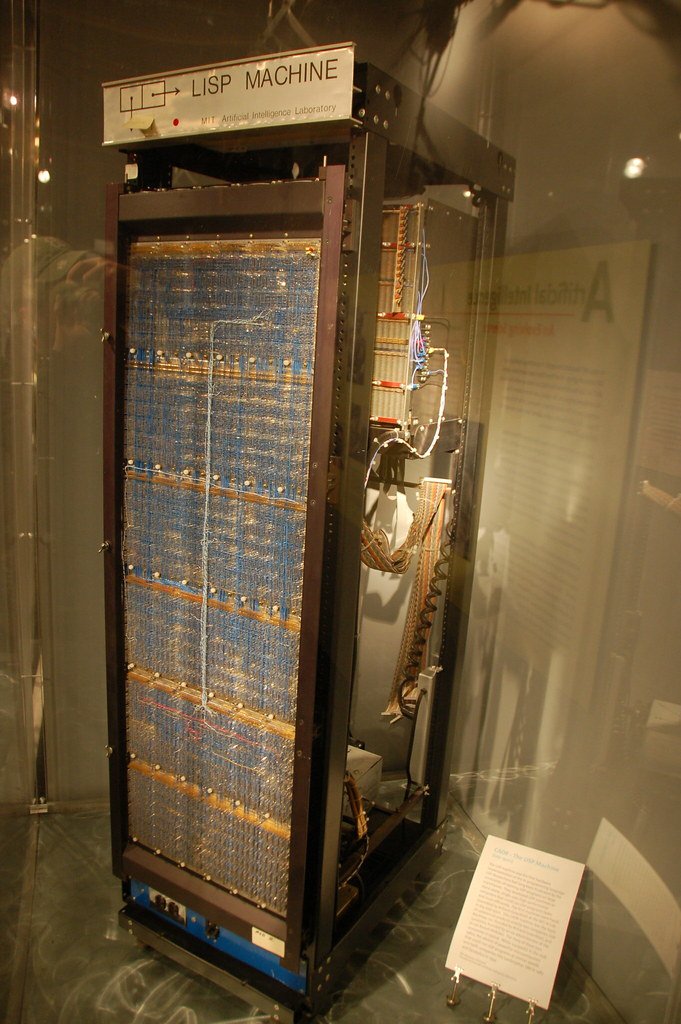

Throughout the 1960s and 1970s, AI work at MIT and Stanford ran largely on LISP, as did research at Xerox PARC from the 1970s. By the late 1970s and early 1980s, entire machines such as MIT’s CADR and the Symbolics 3600 were built to execute it natively. They offered bit-mapped graphics, networking, and windowed GUIs years before personal computers caught up.

Lasting Footprints in Modern Computing

Although the AI “winter” of the late 1980s cooled commercial enthusiasm, LISP concepts permeated mainstream software:

- Garbage collection underpins Java, C#, Go, and many scripting languages.

- Lambda expressions and higher-order functions in Python, JavaScript, and Kotlin echo LISP’s functional core.

- Macro systems in Rust and Elixir owe a debt to LISP’s code-as-data philosophy.

- In modern AI libraries, TensorFlow’s graph construction and PyTorch’s tracing tools revive the idea of programs that build and transform other programs at runtime.

Even today, Common Lisp and Clojure power research prototypes, trading systems, and aerospace missions. The language’s resilience stems from the same qualities McCarthy prized: minimal syntax, maximal abstraction, and an evaluator that you can read, modify, or replace.

Conclusion

A well-known quip, usually attributed to MIT’s Joel Moses rather than to McCarthy, compares LISP to a ball of mud. You can keep adding mud and it still looks like a ball of mud; in the same way, new features can be stuck on without breaking the old ones. That plasticity made LISP the default tongue of early AI and a silent partner in every generation since. From garbage-collected runtimes to interactive notebooks, echoes of LISP shape the tools we use to build today’s machine-learning models. Understanding its origins is more than nostalgia. It is a reminder that the most enduring innovations often start as elegant theories expressed in just a few pages of code.