When psychologist-turned-computer-scientist Frank Rosenblatt unveiled the Perceptron in July 1958, reporters hailed it as “the first machine capable of original thought.” Behind the fanfare stood a deceptively simple learning algorithm that would launch neural-network research, fade into obscurity, and later inspire today’s deep-learning renaissance.

From Cornell’s Labs to the IBM 704

Rosenblatt, then at the Cornell Aeronautical Laboratory, set out to model how biological neurons combine to recognize patterns. He coded his algorithm on an IBM 704, feeding punch cards that represented black-and-white images. Within 50 trials the program learned to tell left-marked cards from right-marked ones—an early glimpse of supervised learning.

To prove the concept could scale beyond code, Rosenblatt and engineers built the Mark I Perceptron: a room-sized contraption whose 400 light-sensitive cells connected (via a tangle of analog wires) to “association units” that could be electrically re-weighted. The machine’s hardware embodied the algorithm’s weights, foreshadowing today’s AI accelerators.

How the Perceptron Learns

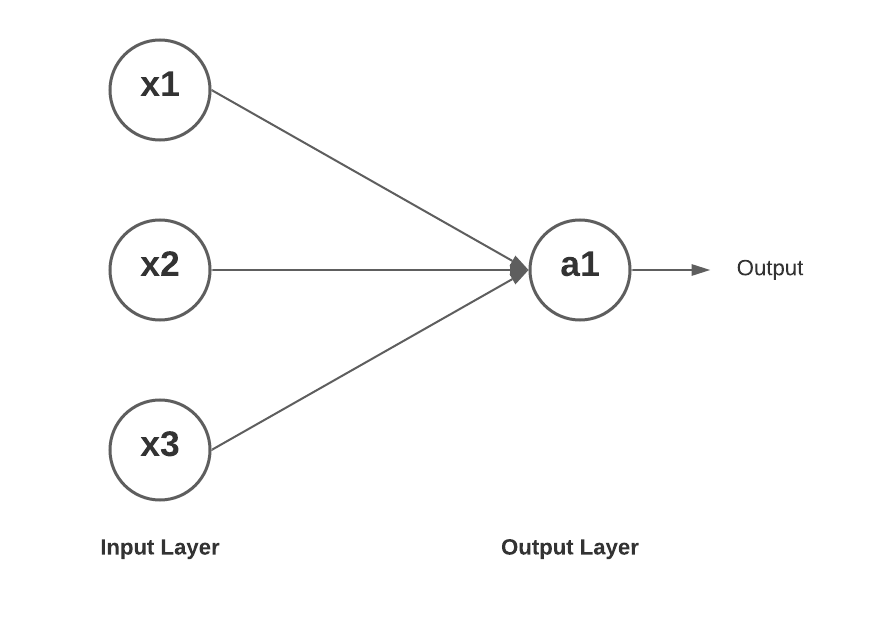

At its core the perceptron is a single-layer neural network. Each input $x_i$ is multiplied by a weight $w_i$, and the weighted sum $\sum_i w_i x_i$ passes through a step function that outputs 1 if the sum exceeds a threshold and 0 otherwise. During training, each weight is updated by a simple rule:

$w_i \leftarrow w_i + \eta (y-\hat{y}) x_i$

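where $y$ is the true label, $\hat{y}$ the prediction, and $\eta$ the learning rate, a small positive constant that sets the step size. If the output is wrong, weights shift toward the correct answer; if right, $y-\hat{y}$ is zero and they stay put. Rosenblatt proved that if the data are linearly separable, this rule finds a perfect classifier after a finite number of updates, a landmark result known as the perceptron convergence theorem.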
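The update rule above can be sketched in a few lines of Python. This is a minimal illustration, not a reconstruction of Rosenblatt's IBM 704 program. It makes several assumptions the text does not state: the step function fires when the sum is strictly positive, a bias term stands in for the threshold and is trained like a weight on a constant input of 1, the learning rate is 1.0, and the training data is the logical AND function, which is linearly separable. The function names are hypothetical.

```python
def predict(weights, bias, x):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Apply w_i <- w_i + lr * (y - y_hat) * x_i until an epoch has no mistakes.

    Returns (weights, bias, epochs_used); epochs_used is None if the
    epoch limit is reached without a mistake-free pass.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0  # assumption: bias acts as a weight on a constant input of 1
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:
            return weights, bias, epoch
    return weights, bias, None


# Logical AND: linearly separable, so the convergence theorem applies.
AND_INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_LABELS = [0, 0, 0, 1]

weights, bias, epochs = train_perceptron(AND_INPUTS, AND_LABELS)
print([predict(weights, bias, x) for x in AND_INPUTS])  # matches AND_LABELS
```

Because a correct prediction makes the error zero, the weights change only on mistakes, which is exactly the "stay put" behavior described above.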

Note: Want the full mathematical derivation and Rosenblatt’s original figures? See our deep-dive post “Inside Rosenblatt’s 1958 Perceptron Paper” for line-by-line commentary and downloadable proofs.

Promise: Machines That Could “See”

Rosenblatt predicted perceptrons would read handwriting, translate speech, and even pilot spacecraft. The U.S. Office of Naval Research funded the work, imagining battlefield image recognizers. Popular magazines hyped an imminent age of electronic brains, and the term “perceptron” briefly eclipsed “computer” in tech columns.

Limits: A Single Neuron Isn’t a Brain

Reality struck in 1969 when Marvin Minsky and Seymour Papert published Perceptrons, which proved that a single-layer network cannot compute functions as simple as XOR, or equivalently test whether two bits are equal, because such functions are not linearly separable. Their rigorous math helped chill funding for neural-network research and is widely cited as a trigger of the first “AI Winter.” Rosenblatt himself never accepted the critique, and he was already experimenting with multi-layer variants, but his untimely death in 1971 cut that work short.
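A short derivation shows why XOR is out of reach for a single unit. Assume, as a common convention rather than anything stated above, that the unit outputs 1 when $w_1 x_1 + w_2 x_2 + b > 0$ and 0 otherwise, where $b$ is a bias term. The four XOR cases would then require:

$(0,0) \mapsto 0:\; b \le 0$

$(1,0) \mapsto 1:\; w_1 + b > 0$

$(0,1) \mapsto 1:\; w_2 + b > 0$

$(1,1) \mapsto 0:\; w_1 + w_2 + b \le 0$

Adding the two middle inequalities gives $w_1 + w_2 + 2b > 0$, so $w_1 + w_2 + b > -b \ge 0$, which contradicts the last requirement. No choice of weights and bias satisfies all four cases at once.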

Legacy: Foundations for Deep Learning

Although eclipsed for nearly two decades, the perceptron’s ideas resurfaced in the 1980s as computing power grew and back-propagation made training multi-layer networks practical. Today, every neuron in a convolutional or transformer model is a lineal descendant of Rosenblatt’s threshold unit, with the hard step replaced by a differentiable activation. Even much of the modern terminology (weights, learning rate, training set) echoes the vocabulary of that early work.
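To make the value of extra layers concrete, the sketch below wires three threshold units into a two-layer network that computes XOR. The weights are chosen by hand for illustration and are not learned by back-propagation; the function names and the particular weight values are assumptions, and many other choices would work equally well.

```python
def step(total):
    """Hard threshold: 1 if the input is positive, else 0."""
    return 1 if total > 0 else 0


def unit(weights, bias, inputs):
    """A single perceptron-style unit with fixed weights."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_two_layer(x1, x2):
    # Hidden layer: two units looking at the same pair of inputs.
    h_or = unit((1, 1), -0.5, (x1, x2))    # fires if at least one input is 1
    h_and = unit((1, 1), -1.5, (x1, x2))   # fires only if both inputs are 1
    # Output unit: "OR but not AND", which is exactly XOR.
    return unit((1, -1), -0.5, (h_or, h_and))


print([xor_two_layer(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])
# prints [0, 1, 1, 0]
```

The hidden units re-encode the inputs so that the output unit faces a linearly separable problem, which is the step a single layer cannot take on its own.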

The perceptron also advanced cognitive science: Rosenblatt framed learning as statistical inference, bridging psychology and engineering. That probabilistic view anticipates ideas now central to reinforcement learning and Bayesian neural nets.

Why the Perceptron Still Matters

- Historical Milestone – One of the first working demonstrations of a machine learning from labeled examples.

- Algorithmic Simplicity – A teaching tool that captures the essence of gradient-based learning without calculus.

- Cautionary Tale – Shows how hype cycles and theoretical critiques can stall promising research, a lesson echoed in today’s debates over large language models.

Conclusion

Frank Rosenblatt’s perceptron proved that machines could learn from experience—a radical claim in 1958 that now feels obvious. Its initial shortcomings spurred deeper theory, eventually leading to networks thousands of layers deep that classify images, translate languages, and generate code. By revisiting the perceptron we trace the intellectual DNA of modern AI back to a single-layer circuit of weighted wires and flashing bulbs—a reminder that even today’s most complex systems rest on elegant, intuitive beginnings.